Most music is based on the harmonic series. It is a very simple progression of frequencies which sound good together: whole-number multiples of a starting frequency. For example, let's say a note is played at 1000 Hz. From there, the harmonic series goes up:

- 1000 Hz

- 2000 Hz

- 3000 Hz

- 4000 Hz

- 5000 Hz

- ...

We can also go down instead of up, dividing by whole numbers instead of multiplying:

- 1000 Hz

- 500 Hz

- 333 Hz (rounded)

- 250 Hz

- 200 Hz

- ...

And that's pretty much all we need to know to build a musical scale.
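The two lists above can be reproduced with a couple of lines of arithmetic. This is just a minimal sketch; the function names `harmonics` and `subharmonics` are made up for illustration.

```python
def harmonics(base_hz, count):
    """Going up: the base frequency multiplied by 1, 2, 3, ..."""
    return [base_hz * n for n in range(1, count + 1)]


def subharmonics(base_hz, count):
    """Going down: the base frequency divided by 1, 2, 3, ..."""
    return [base_hz / n for n in range(1, count + 1)]


print(harmonics(1000, 5))                         # [1000, 2000, 3000, 4000, 5000]
print([round(f) for f in subharmonics(1000, 5)])  # [1000, 500, 333, 250, 200]
```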

Here's what happens if we start at an arbitrary note and then apply the harmonic series. The algorithm used is very simple:

- Start with one frequency. Make a dot on the keyboard where that frequency is.

- For each harmonic we care about, and each frequency we've looked at so far, do the following:

  - Multiply or divide that frequency by a simple number, like 2 or 3 or 1/2 or 1/3.

  - Make a dot on the keyboard where that frequency would be.

  - Add this frequency to the list, then move down one row and go back for another iteration.
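Here's one way the steps above could be sketched in code. It's only my reading of the algorithm, not necessarily how the images were generated. Frequencies are stored as exact ratios relative to the starting note, and the names `spread` and `pitch_class` are hypothetical. "Making a dot" is approximated by working out which of the 12 keys a ratio lands nearest to, ignoring octaves.

```python
from fractions import Fraction
from math import log2


def spread(ratios, iterations):
    """Repeatedly multiply every known ratio by every allowed ratio.

    Returns a list of sets; entry i holds the ratios (relative to the
    starting note) that first appeared on iteration i.
    """
    seen = {Fraction(1)}
    rows = [set(seen)]
    for _ in range(iterations):
        new = {f * r for f in seen for r in ratios} - seen
        rows.append(new)
        seen |= new
    return rows


def pitch_class(ratio):
    """Position within the octave in half-steps (0 up to 12), ignoring octaves."""
    return (12 * log2(ratio)) % 12


ratios = [Fraction(1, 3), Fraction(1, 2), Fraction(1), Fraction(2), Fraction(3)]
rows = spread(ratios, 6)
keys_hit = {round(pitch_class(r)) % 12 for row in rows for r in row}
print(len(keys_hit))  # number of distinct keys reached so far
```

In this sketch, the ratios 1/3, 1/2, 1, 2, and 3 reach all 12 keys after six iterations. With only 1/2, 1, and 2, every ratio lands on the starting key.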

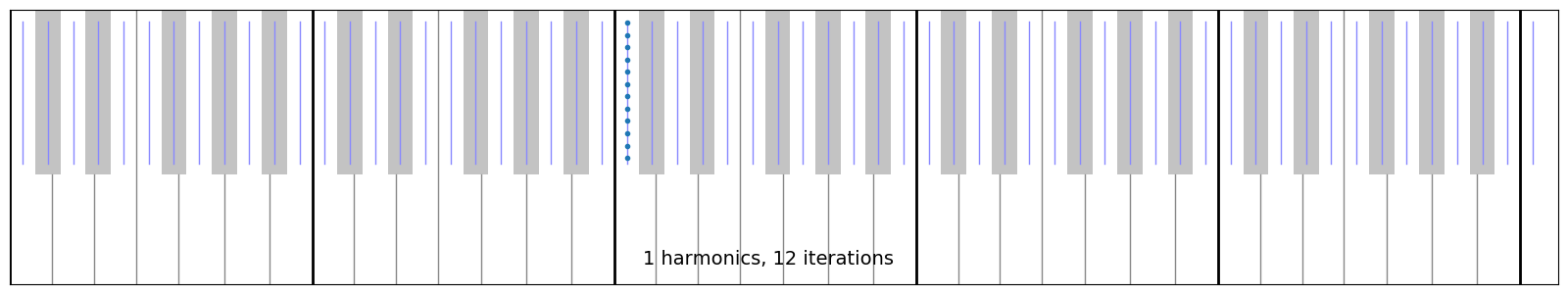

Here's how it looks when we only use "1" as a ratio. There's only one note:

(each dot is one iteration, going from top to bottom)

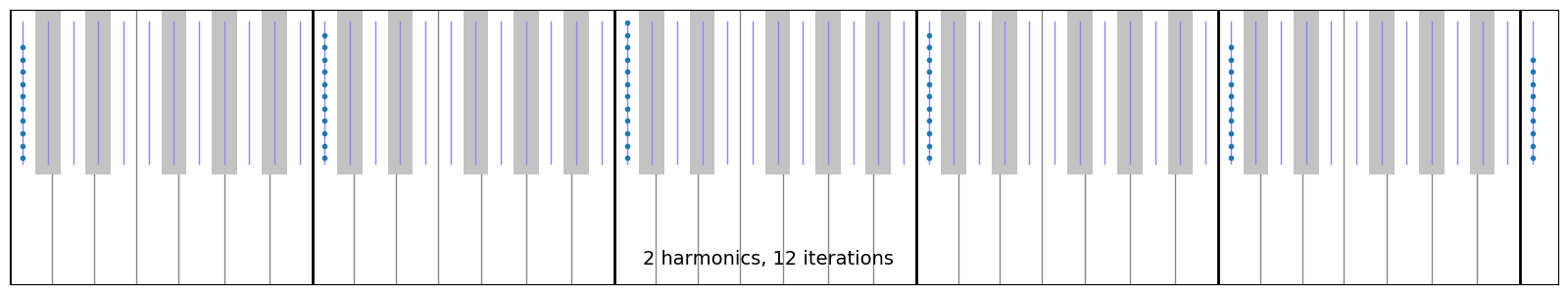

If we add in another harmonic, the ratios are 1/2, 1, and 2.

This gives us the original frequency plus all the different octaves.

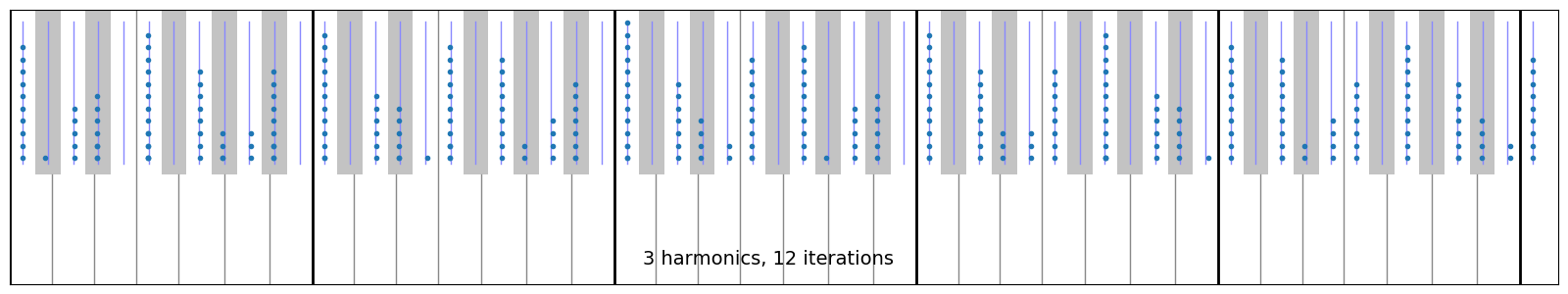

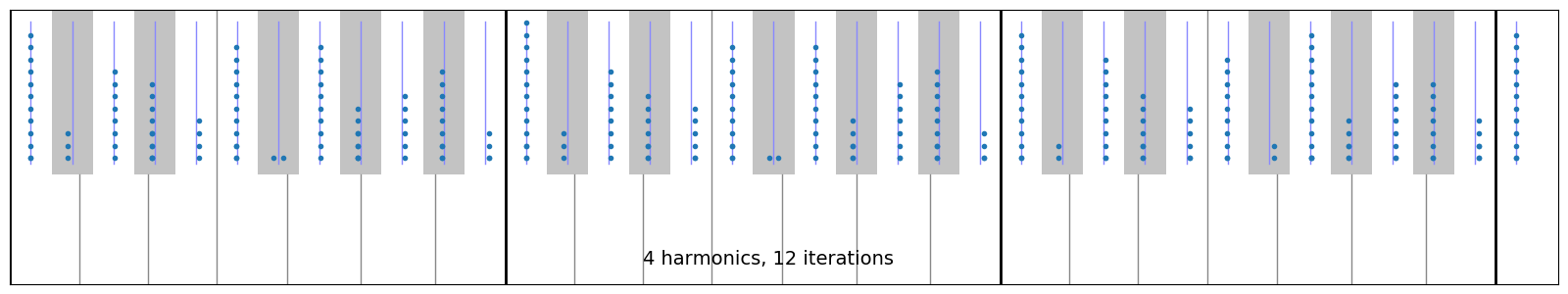

Add one more harmonic, and the ratios are 1/3, 1/2, 1, 2, and 3.

At first it gives us just the original note, the octaves, and the extra note in a "power chord" like what people play in heavy metal. That extra note is the fifth, 7 half-steps up from the original tone. But after enough iterations, these ratios produce 12 different clusters of frequencies.
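A quick bit of arithmetic backs up both claims. This is a sketch using "cents" as a unit, which the rest of this post doesn't rely on: 100 cents is one half-step, so an octave is 1200 cents. A ratio of 3 is an octave plus a fifth, so within a single octave it behaves like 3/2.

```python
from math import log2

# Size of the 3/2 ratio measured in half-steps.
fifth_in_half_steps = 12 * log2(3 / 2)
print(round(fifth_in_half_steps, 3))  # 7.02

# Twelve stacked fifths almost equal seven stacked octaves.
twelve_fifths = (3 / 2) ** 12  # about 129.75
seven_octaves = 2 ** 7         # 128
gap_in_cents = 1200 * log2(twelve_fifths / seven_octaves)
print(round(gap_in_cents, 2))  # 23.46
```

Because twelve fifths overshoot seven octaves by less than a quarter of a half-step, the thirteenth note lands almost on top of the first. That near-miss is why the dots bunch into 12 clusters rather than lining up exactly.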

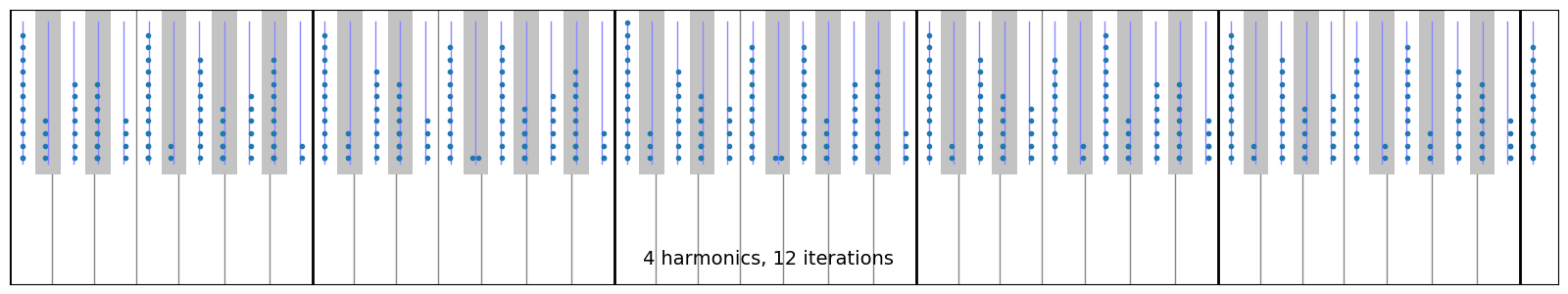

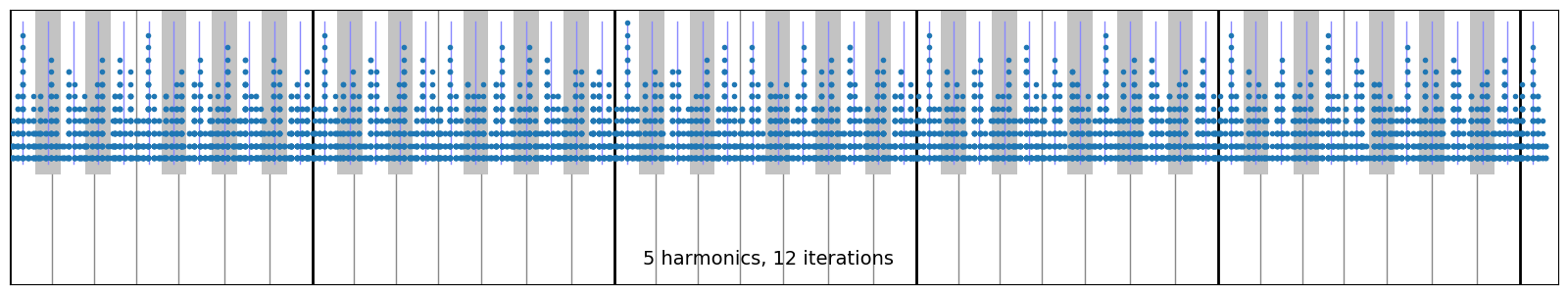

Add one more harmonic, and the ratios are 1/4, 1/3, 1/2, 1, 2, 3, and 4.

This converges to the same results as last time, because 4 is just 2 × 2, but it takes fewer iterations to get there. At the end, the numbers land in 12 different clusters. This is why the common scale has 12 notes per octave.

In general, the higher up the dots on a given key go, the more harmonic it sounds when played together with middle C. The lower the dots start, the more dissonant it sounds compared to middle C.

When starting on middle C, the least harmonic notes (the ones where the dots are lowest) are: F#, C#, and B. B and C# make sense because they're only one half-step away from C, and that means the frequencies have a relatively long cycle before they line up.

And then there's F#. This is called a tritone, the "devil's interval", because C to F# is widely considered the most dissonant interval on the piano. Also, it has two dots next to each other... because there are two similar perfect ratios which are equally harmonic. So really, there are two different F# notes, and both are equally valid within the scale. It just depends on which other notes you pair it with. But that gets complicated and confusing pretty quickly, so the scale instead uses a single note exactly halfway between. This makes it sound even more dissonant.
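To put numbers on the two F# notes, here's a sketch that assumes they come from stacking six fifths upward and six fifths downward. That fits the ratios used so far (2 and 3), but the exact ratios behind the two dots are my assumption. The helpers `fold_into_octave` and `cents` are made up for illustration, and 100 cents is one half-step.

```python
from fractions import Fraction
from math import log2


def fold_into_octave(ratio):
    """Multiply or divide by 2 until the ratio lies between 1 and 2."""
    while ratio >= 2:
        ratio /= 2
    while ratio < 1:
        ratio *= 2
    return ratio


def cents(ratio):
    """Distance above the starting note, where 100 cents is one half-step."""
    return 1200 * log2(ratio)


sharp_side = fold_into_octave(Fraction(3, 2) ** 6)  # six fifths up
flat_side = fold_into_octave(Fraction(2, 3) ** 6)   # six fifths down
print(sharp_side, round(cents(sharp_side), 1))  # 729/512 611.7
print(flat_side, round(cents(flat_side), 1))    # 1024/729 588.3
print(round((cents(sharp_side) + cents(flat_side)) / 2, 1))  # 600.0
```

Under that assumption the two candidates sit about 12 cents either side of 600 cents, which is exactly six half-steps: the single F# on the keyboard.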

Add in one more harmonic, and then things turn into a huge mess.

Almost nobody writes music this way, because it's too complicated and usually sounds bad.

Even microtonal musicians try to stay toward the top of the graph, where there are fewer dots... because lower down, it's easier to just calculate the ratios manually than it is to deal with hundreds or thousands of notes in a single octave. May as well just use totally free tuning at that point.

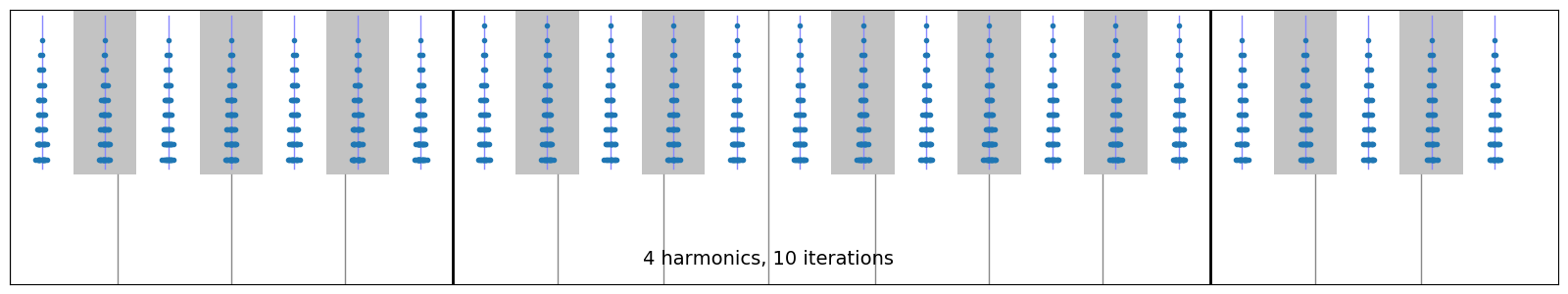

You may have noticed that the notes aren't perfectly aligned. Here's a closer look.

The blue line in each note represents "equal temperament", or what happens when we space 12 frequencies evenly throughout the octave, so that every half-step has the same frequency ratio. This is used as a common tuning for many instruments because, even though it's not perfect, it's really close... and then it doesn't matter what scale or key you play in. They're all more or less equally in tune (or equally out of tune).
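Here's a small sketch of how close "really close" is, using the fifth (3/2) and the fourth (4/3) as examples. The function names are hypothetical, and offsets are measured in cents, where 100 cents is one half-step.

```python
from math import log2


def equal_tempered_ratio(half_steps):
    """Each half-step multiplies the frequency by the twelfth root of 2."""
    return 2 ** (half_steps / 12)


def offset_in_cents(pure_ratio, half_steps):
    """How far a pure ratio sits from its equal-tempered key."""
    return 1200 * log2(pure_ratio) - 100 * half_steps


print(round(equal_tempered_ratio(7), 4))    # 1.4983, versus 1.5 for a pure 3/2
print(round(offset_in_cents(3 / 2, 7), 2))  # 1.96
print(round(offset_in_cents(4 / 3, 5), 2))  # -1.96
```

So the equal-tempered fifth is about 2 cents flat of the pure ratio, and the fourth is about 2 cents sharp. Notes reached through more multiplications by 3 drift further from their keys.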

The blue dots, of course, represent perfect intervals relative to the original note of "C".

Sometimes musicians on fretless instruments bend each note slightly up or down to get closer to a perfect interval. Sometimes people tune their instruments to a specific key, to align everything with the dots above... so it sounds better when played in that key, but worse when played in any other key. And sometimes people play microtonal instruments so they can get a perfect and exact pitch every time no matter what key they're playing in. But that requires a really good ear, and it places extra restrictions on composition... and some listeners may think it sounds out of tune because they're accustomed to the evenly-spaced scale.

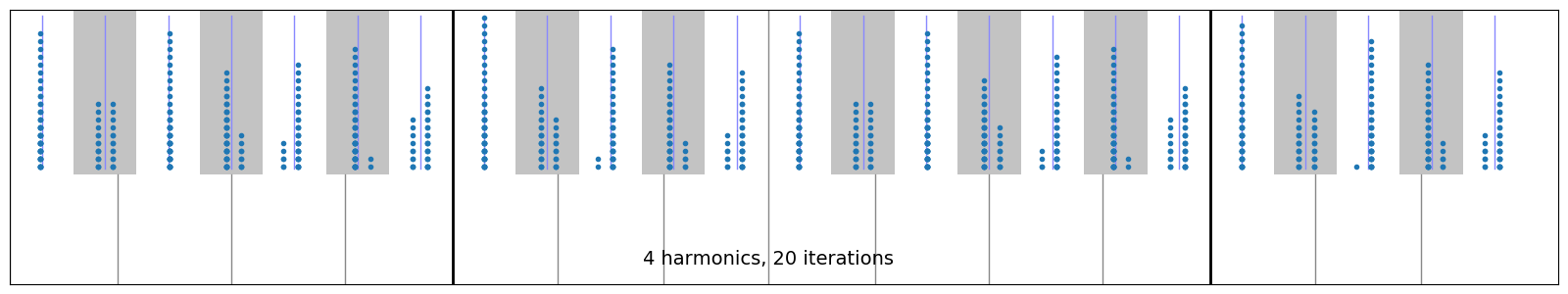

Zooming in even further, and running the algorithm for more iterations, it becomes clear that most notes have two possible frequencies for perfect intervals... but one is usually more dominant, and the other is only used on rare occasions. Mostly, the choice of which one is better seems to depend on which direction it's approached from, like from above or below, and from how far away.

Or, if we use all 12 equal-temperament notes as a starting point instead of just "C", here's how the result looks. It's like the previous image, but with 12 slightly-offset copies all overlaid onto the same graph:

Overall though, the slight detuning isn't usually a problem. Rather, I'd say the slight detuning is actually more of a feature than a bug. Here's why:

Many of the most prized instruments are old retro analog synthesizers. The reasons people give for this are that they sound "warm" or have "character". What that really means, though... is that they have a lot of imperfections. The notes they play are all a little bit skewed, a little bit "off". And it sounds fantastic.

Meanwhile, musicians tend to avoid instruments which sound "digital". They describe the sound as "cold" or "lifeless", because on early digital synths the notes were all perfect: exactly in tune, and exactly the same each time.

To improve their aesthetic qualities, digital synths (and even newer analog synths) have had to implement intentional imperfections, often referred to as "analog feel". This is done by detuning each note a little, usually either randomly or in a manner which emulates an old poly synth with a fixed number of imperfect voice circuits played round-robin style.
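The round-robin approach might look something like this. It's a hypothetical model, not any particular synth's implementation. The class name, the voice count, and the 5-cent error range are all assumptions picked for illustration, and 100 cents is one half-step.

```python
import random


def detune(freq_hz, cents):
    """Shift a frequency by a number of cents (100 cents = one half-step)."""
    return freq_hz * 2 ** (cents / 1200)


class RoundRobinVoices:
    """Hypothetical poly synth: each voice has its own fixed tuning error."""

    def __init__(self, voice_count=6, max_error_cents=5.0, seed=None):
        rng = random.Random(seed)
        self.errors = [rng.uniform(-max_error_cents, max_error_cents)
                       for _ in range(voice_count)]
        self.next_voice = 0

    def play(self, freq_hz):
        """Return the slightly-off frequency the next voice would produce."""
        error = self.errors[self.next_voice]
        self.next_voice = (self.next_voice + 1) % len(self.errors)
        return detune(freq_hz, error)


synth = RoundRobinVoices(voice_count=3, seed=1)
print([round(synth.play(440.0), 2) for _ in range(6)])
```

With three voices, the same key played six times gives three slightly different pitches, repeated in the same order. The purely random variant would instead draw a fresh error on every call to `play`.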

There are also synthesizers which use FM (frequency modulation) techniques, or which use additive synthesis by combining perfect intervals in the harmonic series. In both cases, they usually sound very clean and crisp when the intervals are perfect, but they turn into a dissonant mess when the intervals are even slightly "off". So in these cases, digital perfection isn't just good... it's virtually required. But that's only for the frequencies within a single note.
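One rough way to see the difference in the additive case is to check whether the waveform repeats exactly every cycle. This is only a sketch and a stand-in for what the ear hears. The 1/m amplitude for each partial and the skewed multiples 2.02 and 3.05 are arbitrary choices for illustration.

```python
from math import sin, pi


def additive_sample(t, fundamental_hz, multiples):
    """One sample of a tone built from sine partials at fundamental * multiple."""
    return sum(sin(2 * pi * fundamental_hz * m * t) / m for m in multiples)


def repeat_error(fundamental_hz, multiples, points=50):
    """Largest difference between the waveform and itself one period later."""
    period = 1 / fundamental_hz
    times = [period * i / points for i in range(points)]
    return max(abs(additive_sample(t, fundamental_hz, multiples)
                   - additive_sample(t + period, fundamental_hz, multiples))
               for t in times)


print(repeat_error(100.0, [1, 2, 3, 4]))        # effectively zero: exact harmonics
print(repeat_error(100.0, [1, 2.02, 3.05, 4]))  # clearly nonzero: skewed partials
```

With exact multiples, every cycle is identical and the partials fuse into one steady tone. With skewed multiples the shape keeps drifting from one cycle to the next.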

I have a theory about why the imperfection is desirable sometimes and not at other times:

- Perfect intervals are interpreted by the ear as being parts of a single note. The overtones and undertones are interpreted as "timbre", or the overall character of the instrument.

- Imperfect intervals are interpreted by the ear as being separate notes. Play a few at once, and instead of changing the timbre, it makes a chord.