Package Set |

Quantity |

Debian has a lot of packages. Let me say that again. Debian has a

lot of packages. The only Linux distro which can compete on these

grounds, at all, is Gentoo.

Let me give some details. On my Debian desktop, I asked APT for some stats about its database (apt-cache stats). It claims to know of 23533 packages, including 28382 distinct versions. The Debian project only claims a modest 15490 packages, but that's a low estimate.

For comparison, I asked CentOS 3 for roughly the same information: yum list displays just 680 packages. On another Red Hat-based system, it claims to provide 1199 packages. CentOS 4.1 has 1406 (or 1538 with the "plus" and "extras" enabled), which is just 6% to 6.5% the size of Debian. And on the newest Fedora, it may even have as many as 2000. But that's a far, far cry from 23000+. Over 90% of the packages are missing!
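The percentages above can be re-derived from the quoted counts. The short Python sketch below only redoes that arithmetic; the constant and function names are made up for illustration, and the figures are the ones reported in the text, not fresh measurements.

```python
# Package counts as quoted in the text above (not re-measured).
DEBIAN_APT = 23533        # packages reported by "apt-cache stats"
CENTOS_41 = 1406          # CentOS 4.1 base repositories
CENTOS_41_EXTRAS = 1538   # CentOS 4.1 with "plus" and "extras" enabled
FEDORA_ESTIMATE = 2000    # generous guess for the newest Fedora


def percent_of_debian(count: int) -> float:
    """Return a package count as a percentage of the Debian total."""
    return 100.0 * count / DEBIAN_APT


if __name__ == "__main__":
    for label, count in [
        ("CentOS 4.1", CENTOS_41),
        ("CentOS 4.1 + plus/extras", CENTOS_41_EXTRAS),
        ("Fedora (estimate)", FEDORA_ESTIMATE),
    ]:
        print(f"{label}: {percent_of_debian(count):.1f}% of Debian")
    missing = 100.0 - percent_of_debian(FEDORA_ESTIMATE)
    print(f"Missing relative to Debian: {missing:.1f}%")
```

Even with the generous Fedora estimate, the share that is missing stays above 90%.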

Why does this matter?

Most distros carry most of the really important or popular packages, right? They all have the kernel, X11, apache, openoffice, bind, and pretty much all the other major programs. But many people will end up wanting at least one or two "obscure" packages. Here are a few of the packages I use which you won't find in the latest Fedora Core (4):

- Ardour: professional sound editor.

- Sweep: DJ's sound editor.

- CheeseTracker: music composer.

- PovRay: 3D renderer.

- MPlayer: audio/video player and swiss-army multimedia tool.

- VLC: audio/video player (VideoLAN Client).

- Video file manipulation tools: mkvtoolnix, ogmtools.

- MP3 players: madplay, mpg321, mpg123.

- Other audio-format players: xmp, festalon, etc.

- Games and game emulators: Abuse, Pingus, FCEU, Zsnes, SNES9x, xdemineur, Frozen Bubble, Armagetron, GL-Tron, etc...

- (X)MAME, the arcade machine emulator.

- JsCalibrator, for calibrating joysticks.

- XKBD, an onscreen keyboard for tablet/PDA input.

- BitchX, popular IRC client.

- DircProxy, the detachable IRC proxy.

- PyGame and Python-OpenGL: SDL and OpenGL support for Python.

- Xaos, the fast fractal zoomer.

- The Enlightenment desktop, and friends (Eterm, etc).

- Window managers in general: sawfish, FVWM, IceWM, pwm, ratpoison, Matchbox, ...

- Exiftran, for automatically processing your digital camera photos.

- BitTorrent, BitTornado, etc.

- Alien, for converting and installing packages from other distros.

- Checkinstall, for auto-packaging from source.

- FakeRoot, for safely building packages as a regular user.

- PwGen, the password generator.

- A dictionary. (dict-gcide, dict-wn)

- A thesaurus. (dict-moby-thesaurus)

- QEMU, the fast computer emulator.

- DOSEMU; DOS emulator.

- x11vnc, for making the current desktop available over the network.

- TightVNC viewers/server; a more efficient remote desktop solution.

- x2x, which allows the user to control multiple computers with one keyboard/mouse.

- xzoom, which enlarges portions of the screen.

- Intelligent auto-DJ for XMMS (gjay), visualizer plugin (goom), or even the basic XMMS package.

- Ability to record the output of audio programs (vsound).

- SmokePing; monitors network response times.

- EtterCap; gives detailed network traffic analysis.

- ModLogAn, for summarizing Apache (and other) log files.

- WebMin, the popular web-based administration suite.

- PhpMyAdmin, a powerful web-based front end to MySQL.

- GDesklets, providing useful and pretty desktop widgets.

- RadeonTool, NVidia Source, and other accelerated video support packages.

- Courier, the easy and fast IMAP/POP/SMTP server.

- ... and many others.

Looking again, at the extras repository, I managed to find XMMS and BitTorrent. But the rest are all missing. And I was looking for packages which, for the most part, are well-known, popular, and interesting to a large audience. Chances are that you won't find everything you're looking for in a Red Hat-based distro.

Quality |

Debian has over a thousand package maintainers. I hear it may be nearly

two thousand by now, though I don't know the exact number. That means each

maintainer is responsible for anywhere from 8 to 24 packages, on average.

The packages are maintained by people who care about the specific software

they provide, and usually are experts on what they package. In many cases,

the maintainers make significant improvements from the upstream versions,

which usually find their way back to the author eventually.
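The 8-to-24 range looks like it comes from dividing the package counts quoted in the Quantity section by the two maintainer estimates. That pairing is my reading rather than something stated outright, so treat this Python sketch as a plausibility check only; the function name is invented for the example.

```python
def packages_per_maintainer(packages: int, maintainers: int) -> float:
    """Average number of packages each maintainer would look after."""
    return packages / maintainers


# Assumed pairing: the lowest package count with the highest maintainer
# estimate, and the highest package count with the lowest estimate.
low = packages_per_maintainer(15490, 2000)   # project's count, ~2000 maintainers
high = packages_per_maintainer(23533, 1000)  # apt-cache count, ~1000 maintainers

if __name__ == "__main__":
    print(f"roughly {round(low)} to {round(high)} packages per maintainer")
```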

Red Hat systems usually have a much smaller package team -- anywhere from one person to a few dozen. Each maintainer is responsible, on average, for anywhere from a few dozen to a few hundred packages. Many Red Hat-based distros simply recompile the SRPMs every six months when Red Hat makes a new release, without really checking the package quality at all -- even to see if the packages are broken. This all tends to result in lower overall package quality. (Sometimes even Red Hat doesn't check whether its packages work; for example, the broken gcc in RH7.0.)

It's also worth mentioning the packaging guidelines in Debian. Packages must meet certain criteria, which creates a high degree of consistency. I won't get into detail just now, but for example, you can find documentation on any package by looking in /usr/share/doc/package-name .

Feeds vs Releases |

I haven't reinstalled the OS on my desktop computer since 1997, when I first

installed Debian. And yes, it's still up to date -- running a kernel which

is, as I write this, 34 days old, plus the latest versions of nearly

everything else: X11, Firefox, GIMP, Apache, and so on.

Instead of asking yourself "when do I want to schedule a massive upgrade?", Debian lets you simply decide easier things like "how cutting-edge do I want to be?" and "do I feel like upgrading Bind today?".

Upgrade Patterns |

Software is a continuously-evolving field; a moving target, as it were.

Software is released or updated every day, and Debian reflects this by

releasing new and updated packages daily. You don't have to wait 6 months

until the next release, so you can upgrade whenever you want to.

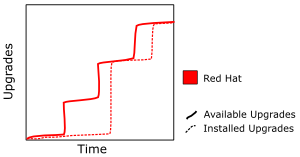

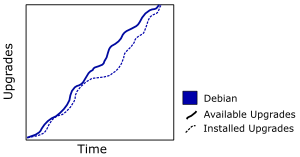

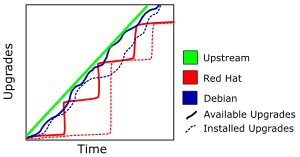

Let me put it a different way... Red Hat uses a "stair step" upgrade pattern, and Debian uses a "smooth slope" pattern. Here are some diagrams to show what I mean.

By using a smooth upgrade process, you never have to take the Red Hat "leap

of faith" where you upgrade everything all at once (or rebuild/reinstall),

and hope everything still works afterward.

This translates into serious cost savings because it avoids the need to rebuild servers every year. Just update small pieces now and then whenever it's appropriate to do so, and the server will keep running indefinitely. You can generally even do complete hardware upgrades without having to reinstall the software. Just move the old hard drive into the new machine, or copy its contents verbatim, and you're done.

The Red Hat upgrade pattern is much like a flight of stairs or series of cliffs. Each step, six months apart, brings major upgrades, but there is little activity between releases. Many people opt to skip entire release cycles, to avoid the hassle of having to reinstall everything, and end up pretty far behind.

The Debian testing and/or unstable (see below) upgrade pattern is a fairly smooth curve, with new packages coming out daily. Users may upgrade whenever they like, as much or as little as desired, and in a smooth manner which avoids the need to perform traumatic rebuilds or reinstalls.

The two graphs combined reflect some other details. Both distros are always just a little behind the upstream developers, since it takes time to package and test new software. Red Hat tends to be a little bit newer when a new Fedora release comes out, but Debian is usually more up-to-date the rest of the time.

Stable, Testing, Unstable |

Feeds also offer the ability to choose just how safe or bleeding-edge you

want to be. Packages filter through three different feeds, called

unstable, testing, and stable.

There is also an additional feed called experimental, for the

truly adventurous.

- The stable feed is very similar to a release of Red Hat Enterprise Linux. It's rock-solid, and maintained with security updates for quite some time after it's released. However, stable is not released very often, and uses a stair-step upgrade pattern.

- So, for something more up to date, users can add the unstable feed to their apt sources. This is where new packages usually go, so you can always have the latest software available. And despite the name, it's actually quite stable. Things don't break in unstable... often.

- Most people are better off using the testing feed. Don't let the name fool you -- it would be more appropriate to call it the tested feed, since it's where packages go after they have been tested and deemed safe. It's nearly as up-to-date as unstable most of the time, but much safer.

- If, for some reason, there's a brand-new piece of software you absolutely must have, and it's not in unstable yet, you can use the experimental feed. This is almost never necessary, though.

I use stable and testing on all my systems, and make unstable and experimental available only by explicit request (a.k.a. "apt-pinning"). I also have a few 3rd-party feeds set up for the rare packages here and there which aren't actually in Debian yet.
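To make the "explicit request" idea a bit more concrete, here is a toy Python model of priority-based feed selection, in the spirit of apt-pinning. It is a simplified sketch and not APT's real algorithm: the priority numbers, the `Candidate` type, the `pick` function, the tuple-based version comparison, and the example version numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical pin priorities (higher wins). Low-priority feeds lose to any
# higher-priority candidate, so they are effectively used only on request.
PIN_PRIORITY = {"stable": 500, "testing": 500, "unstable": 200, "experimental": 1}


@dataclass(frozen=True)
class Candidate:
    package: str
    version: Tuple[int, ...]  # simplified; real Debian version strings are richer
    feed: str


def pick(candidates: List[Candidate],
         requested_feed: Optional[str] = None) -> Optional[Candidate]:
    """Choose which candidate to install.

    If requested_feed is given, only that feed is considered (the explicit
    request). Otherwise the highest-priority feed wins, and the newest
    version breaks ties between feeds of equal priority.
    """
    if requested_feed is not None:
        pool = [c for c in candidates if c.feed == requested_feed]
    else:
        pool = list(candidates)
    if not pool:
        return None
    return max(pool, key=lambda c: (PIN_PRIORITY[c.feed], c.version))


if __name__ == "__main__":
    # Made-up version numbers, purely for demonstration.
    gimp = [
        Candidate("gimp", (2, 2, 6), "stable"),
        Candidate("gimp", (2, 2, 8), "testing"),
        Candidate("gimp", (2, 3, 0), "unstable"),
    ]
    print(pick(gimp))                             # the testing candidate
    print(pick(gimp, requested_feed="unstable"))  # only when asked for
```

In this model the default choice comes from stable or testing, whichever is newer, and the unstable version is selected only when it is named explicitly.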

Package Manager |

Package Configuration |

Debian incorporates configuration into the package-installation process.

Red Hat decided that automatic, hands-free installation was preferable.

Which one is really better is debatable, but I find the configuration

step in Debian to be a useful and valuable idea. Many packages provide

a simple, modular, easy, menu-driven configurator -- built right into the

package. So, during the install, it asks you questions about anything it

thinks is "important enough" to be worth asking. (you can set a preference

for the level of importance beforehand) And if you change your mind later,

you can "dpkg-reconfigure mypackage" to change the settings.

This provides a few benefits:

- The user doesn't have to learn dozens of different configuration tools and file formats, and the quirks of each.

- Preferences chosen in the menus can be automatically applied to updates, even if the underlying config format changes. This means you don't have to answer the same questions over and over, and don't have to care if the config file changes to a different language.

- Unimportant settings can be skipped automatically, so the novice user (or busy user) doesn't have to care about them.

- Every package uses the same user-friendly interface for configuration. (well, many packages, anyway)
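The "important enough" threshold and the reuse of earlier answers can be sketched in a few lines of Python. The priority names below (low, medium, high, critical) are the levels debconf uses, but everything else here, including the question names and the `questions_to_ask` helper, is a hypothetical simplification rather than how debconf is actually implemented.

```python
from typing import Dict, List

# Priority levels in increasing order of importance.
PRIORITIES = ["low", "medium", "high", "critical"]


def questions_to_ask(questions: List[Dict[str, str]],
                     threshold: str,
                     saved_answers: Dict[str, str]) -> List[str]:
    """Return the names of the questions that should be shown to the user.

    A question is shown only if its priority is at or above the chosen
    threshold and no answer has been saved from an earlier install.
    """
    limit = PRIORITIES.index(threshold)
    return [
        q["name"]
        for q in questions
        if PRIORITIES.index(q["priority"]) >= limit
        and q["name"] not in saved_answers
    ]


if __name__ == "__main__":
    # Hypothetical questions for an imaginary mail server package.
    questions = [
        {"name": "mailserver/banner-text", "priority": "low"},
        {"name": "mailserver/hostname", "priority": "high"},
        {"name": "mailserver/relay-mode", "priority": "critical"},
    ]
    print(questions_to_ask(questions, "high", {}))
    # On a later upgrade, the saved hostname is reused instead of asked again.
    print(questions_to_ask(questions, "high", {"mailserver/hostname": "example"}))
```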

Config File Handling |

Debian keeps track of which files are configuration and which are simply

data or executables. The upshot of this is how it handles upgrades. It

can automatically upgrade config files you haven't touched, but will detect

when you have changed something and ask you how to handle it. Any time a

custom configuration is detected, you get the following choices:

- use your version (but keep a copy of the new version if you need it)

- use the new default version (but keep a copy of your custom version)

- see how the two versions are different

- manually resolve the differences
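The upgrade decision described above boils down to a three-way comparison: the config file as originally packaged, the file currently on disk, and the file shipped in the new package. Here is a minimal Python sketch of that logic. The function names and return values are invented for the example, and it models only the decision, not the actual prompt or file handling.

```python
import hashlib


def digest(text: str) -> str:
    """Checksum used to compare config file contents."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def conffile_action(old_packaged: str, on_disk: str, new_packaged: str) -> str:
    """Decide what to do with one config file during an upgrade.

    Returns "keep" (leave the file alone), "install-new" (replace it
    silently), or "ask" (both sides changed, so the user must choose).
    """
    old, cur, new = digest(old_packaged), digest(on_disk), digest(new_packaged)
    if cur == new:
        return "keep"         # already identical to the new version
    if cur == old:
        return "install-new"  # user never touched it: upgrade silently
    if new == old:
        return "keep"         # package default unchanged: keep user's edits
    return "ask"              # user and package both changed it


if __name__ == "__main__":
    print(conffile_action("port=80", "port=80", "port=80\nssl=on"))    # install-new
    print(conffile_action("port=80", "port=8080", "port=80"))          # keep
    print(conffile_action("port=80", "port=8080", "port=80\nssl=on"))  # ask
```

Only the last case leads to the list of choices above; the other cases are resolved without bothering the user.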

Overall Maturity |

The Fedora project shows a lot of potential. It's a step in the right

direction for Red Hat. In many ways, it's a partial clone of Debian. But

it's not very mature yet. Debian has been around, running itself via its

fully-democratic self-government, since the early days of Linux -- and it

has become a very stable and mature system.

Similarly, APT has been working rather well for nearly a decade. On the other hand, Red Hat's package manager (Yum) is still a rather new and unsophisticated project. The latest Yum still hasn't caught up to where APT was 5 years ago.

Speed |

I can't say I'm completely happy with the speed APT runs at. But I can say

that it's much faster than Yum. And by "much", I mean anywhere from

50 to 500 times faster. I did some basic tests for things like listing the

number of packages, searching for a keyword, and deciding what upgrades are

available... and apt gave me results in a matter of 0 to 3 seconds, while

yum took about 30 to 90 seconds for the same operations, on a machine with

much faster hardware.

Part of this is because Yum insists on doing the equivalent of "apt-get update" before every operation. And yes, I tried the command line option to disable that. It didn't make any difference -- apparently that option is still broken. But Yum is also generally just slower, taking over a minute (after the package list update) to complete operations which took apt just a couple seconds.

I also found that the output of apt made me faster. It prints results in a much more concise, readable form than Yum. And APT's ability to answer questions in just a second or two really helps me work with the system rather than fighting against it.

Update 2006-03-21: Fedora Core 5 was just released, and I hear that yum has gotten significantly faster. It now takes only 7-14 seconds to run things such as "yum info glibc". The same operation in Debian, "apt-cache show libc6", takes 0.01s on my aging desktop system. APT in this case is still ~1000 times faster, but 7-14 seconds really isn't bad.

However, the new graphical package manager in FC5 is apparently very slow. It took 45 minutes to load the dependencies on a Pentium 4, compared to 5-6 seconds to do the same thing in Synaptic on Ubuntu on a Pentium 3. (that's even worse, considering that FC5 has only 2200 (+2200 extras) packages, or about 20% as many as what "apt-cache stats" lists on Ubuntu) By my rough estimations, the FC5 package GUI takes 4500 times longer to start than Ubuntu's package GUI (450 times as long * 5X fewer packages * 2X faster CPU).
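For anyone who wants to check the rough estimates in this update, the arithmetic can be written out in Python. The timings and factors are the ones quoted above; taking 6 seconds for Synaptic (the upper end of "5-6 seconds") is my assumption, and the variable names are just labels for the example.

```python
# Figures quoted in the text; nothing here was re-measured.
fc5_gui_seconds = 45 * 60    # 45 minutes to load dependencies in FC5
synaptic_seconds = 6         # assumed: upper end of the quoted 5-6 seconds

raw_ratio = fc5_gui_seconds / synaptic_seconds   # how many times longer
package_factor = 5           # FC5 lists about 20% as many packages
cpu_factor = 2               # the rough Pentium 4 vs Pentium 3 guess

normalized_ratio = raw_ratio * package_factor * cpu_factor

# Command-line comparison: "yum info glibc" vs "apt-cache show libc6".
apt_seconds = 0.01
yum_low, yum_high = 7, 14
cli_ratio_low = yum_low / apt_seconds
cli_ratio_high = yum_high / apt_seconds

if __name__ == "__main__":
    print(f"GUI, raw: {raw_ratio:.0f}x slower")
    print(f"GUI, normalized: {normalized_ratio:.0f}x slower")
    print(f"CLI: {cli_ratio_low:.0f}x to {cli_ratio_high:.0f}x slower")
```

The command-line ratio works out to somewhere between 700 and 1400, which is where the "~1000 times" figure sits.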

Custom Distros |

Looking briefly at DistroWatch, I

notice that five of the top ten distros are based on Debian. Two or three

are based on Red Hat (depending on whether SuSE counts), and the remaining

two are Slackware and Gentoo. Why are so many successful distros based on

Debian?

The answer, I think, is that Debian is designed to make derivative products easy to make. It's currently the most suitable base for custom distros. It has a relatively tiny base system, an extremely flexible design, and all its distro-building tools are freely available. All the processes for building Debian are a matter of public knowledge, with most of the communication also executed in public channels. In short, Debian applies the open source philosophy to building a distro.

It takes a lot of time and effort to make a Linux distribution. Making one from scratch requires ungodly amounts of time and effort, unless you're making a very small distro. According to a recent study, Debian 3.1 ("Sarge") consists of about 230 million source lines of code, with an estimated 60,000 person-years and $8 billion USD redevelopment cost. This makes it, by a wide margin, the single largest software project ever created.

To be fair, the Debian developers didn't write most of that code. They merely organize, clean, patch, package, and distribute it... and write code to make the system work better. However, managing a repository of practically all free software in the world is no easy task. It takes nearly 2000 people to keep Debian up-to-date. This is why Fedora has fewer than 10% as many packages as Debian -- Fedora simply does not have enough package maintainers to compete.

Other, misc |

Many little details about Red Hat systems bother me. Many of these seem to

be half-cloned features from other OSes or distros, not implemented as well

as the original. I already mentioned Yum compared to APT, but there are

other similar examples. Red Hat still encourages the use of

rc.local, which seems like a bad idea. It uses somewhat

Debian-like ifup and ifdown commands to manage

network interfaces, but the workings of those commands are lacking. The

configuration, in particular, is overcomplicated for no reason. Red Hat

still doesn't have its SysVInit scripts in the standard location, though it

at least has symlinks to them in the right spot.

Packages are named inconsistently, or in an overly generic manner -- for example, "named" and "httpd" instead of "bind9" and "apache2". What if you wanted some other server, like nsd or boa? Debian provides generic packages too, but only for convenience. They merely depend on the real package -- the equivalent of symlinks, except for packages.

Kudzu. Yuck. Servers should not fail to boot because the mouse is unplugged. (I hear this behavior is fixed now, as of late 2005.)

Package (file)names... Debian uses name_version_arch.ext, which is simple, consistent, and easy to parse. Red Hat's names are ambiguous -- they use dashes both in the package name and to separate the name from the version. This problem is complicated because some packages include a version in their names, and then have an additional version after an ambiguous dash.
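The parsing difference is easy to show in Python. This is a sketch under a few assumptions: the helper names are made up, the two filenames are illustrative examples, and the RPM layout is taken to be name-version-release.arch.rpm. The Debian name splits cleanly on underscores, while the RPM name only parses correctly if you work backward from the right.

```python
from typing import Tuple


def parse_deb(filename: str) -> Tuple[str, str, str]:
    """Split name_version_arch.deb into (name, version, arch)."""
    if not filename.endswith(".deb"):
        raise ValueError("not a .deb filename")
    stem = filename[: -len(".deb")]
    name, version, arch = stem.split("_")  # underscores appear only as separators
    return name, version, arch


def parse_rpm(filename: str) -> Tuple[str, str, str, str]:
    """Split name-version-release.arch.rpm into its four parts.

    Dashes may appear inside the name, so the split has to start from
    the right-hand side; a left-to-right split gives the wrong answer.
    """
    if not filename.endswith(".rpm"):
        raise ValueError("not a .rpm filename")
    stem = filename[: -len(".rpm")]
    stem, arch = stem.rsplit(".", 1)
    name, version, release = stem.rsplit("-", 2)
    return name, version, release, arch


if __name__ == "__main__":
    print(parse_deb("libc6_2.3.5-8_i386.deb"))
    rpm = "compat-libstdc++-33-3.2.3-47.3.i386.rpm"
    print(parse_rpm(rpm))
    # A naive left-to-right split stops at the first dash:
    print(rpm.split("-")[0])
```

Note the "33" in the RPM example: it is part of the package name, not the version, which is exactly the kind of ambiguity described above.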

Custom kernels, especially in RHEL... The special patches applied tend to break things. For example, a "feature" which introduces resource starvation into the kernel... on purpose. Because someone at Red Hat thought they knew better than the Linux community. The end result is that the RHEL kernel does weird things like kill init instead of dumping old cached data when it needs more memory.

Another break-the-kernel patch introduced serious scheduling bugs into the Red Hat kernel around v2.4.20: a waiting process would sometimes not be scheduled to run for exceptionally long periods of time. This causes all sorts of problems, like causing heartbeat to think it's dead and take down the machine it's running on -- the opposite of what high-availability software is supposed to do.

Dependencies |

In Red Hat, it's standard practice to use file dependencies in

addition to (or sometimes instead of) regular package dependencies. The

only practical benefit of this that I have been able to find is it slightly

eases the creation of cross-distro packages. Three different Red Hat-based

distros may have three different names for a package. So, if you want to

create your own RPM which works on all three, it's easier to check for a

file you know that other package has than to check for the name of the

package.

Coming from a Debian background, the idea of cross-distro packages seems rather odd to me. But 3rd-party packages are a necessity in the Red Hat world, since the main package set is so tiny. Red Hat simply does not provide enough packages to make up a complete system. And there are so many different flavors of Red Hat that it would be infeasible for 3rd-party package authors to make different packages for each flavor.

So, people use file dependencies. It may defeat the point of having a package namespace, but it is an effective kludge to work around the lack of standards between related distros. And having semi-broken software is arguably better than having no software at all.

Otherwise, file dependencies just cause problems.
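Here is a toy Python illustration of why a file dependency can survive a package rename across distros while a name dependency cannot. The two "distros", their package names, and the helper functions are all hypothetical; real dependency solvers do far more than this.

```python
from typing import Dict, List, Optional

# Two imaginary distros that ship the same file under different package names.
DISTRO_A: Dict[str, List[str]] = {"perl": ["/usr/bin/perl"]}
DISTRO_B: Dict[str, List[str]] = {"perl5": ["/usr/bin/perl"]}


def resolve_by_name(repo: Dict[str, List[str]], package: str) -> Optional[str]:
    """Package dependency: a direct lookup in the package namespace."""
    return package if package in repo else None


def resolve_by_file(repo: Dict[str, List[str]], path: str) -> List[str]:
    """File dependency: scan every package's file list for the path."""
    return sorted(pkg for pkg, files in repo.items() if path in files)


if __name__ == "__main__":
    print(resolve_by_name(DISTRO_A, "perl"))           # found
    print(resolve_by_name(DISTRO_B, "perl"))           # None: different name
    print(resolve_by_file(DISTRO_A, "/usr/bin/perl"))  # ['perl']
    print(resolve_by_file(DISTRO_B, "/usr/bin/perl"))  # ['perl5']
```

The file lookup works on both, but it has to walk the file list of every package, and it bypasses the package names entirely.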

Gentoo |

Just a quick note or two about Gentoo...

Debian has a lot in common with Gentoo. Both have similar advantages in terms of available packages, upgrade mechanisms, and general choice. If anything, Gentoo offers even more choice than Debian, or perhaps inflicts more choice. And that's a good thing, if you know what you're doing. But I wouldn't recommend it for anyone who isn't very technical or detail-oriented.

Gentoo also has some drawbacks, compared to Debian. The main drawback is compiling... especially on older or slower machines. It can take a week to get a full Gentoo desktop system built. It may run a bit faster afterward, but the extra speed costs time.

If you've got the time, and especially if you've got an affinity for the bleeding edge, Gentoo is a great distro. For most people though, a Debian-based distro like Ubuntu is probably a better idea.
